A Guide to Test Retest Reliability

Nov 4, 2025

Test-retest reliability is all about consistency over time. It's the bedrock of any trustworthy data, ensuring that a change in score reflects a genuine change in the person being assessed—not just a wobble in the measurement tool itself. Without it, the results are unpredictable, and frankly, meaningless.

For anyone in research, clinical practice, or education, understanding this concept isn't just academic—it's essential for making sound decisions. This guide will provide actionable insights and practical examples to help you master it.

Understanding Test-Retest Reliability

Think about stepping on your bathroom scale. On Monday, it says 150 pounds. You step on it again a minute later, just to be sure, and now it reads 155 pounds. The next day? 148 pounds. You'd quickly lose faith in that scale, right? Its measurements are all over the place.

Test-retest reliability applies this very same idea to psychological and educational assessments. It asks a simple but vital question: if we give the same test to the same person under the same conditions at two different times, will we get similar results?

When the answer is a resounding "yes," the test is considered reliable. This consistency is absolutely essential in fields from clinical diagnostics to academic evaluations. For example, these principles are fundamental in physical performance testing, where objective data has to accurately reflect an individual's true abilities.

Why Consistency Is So Crucial

Reliability isn't just a technicality; it's the foundation of your confidence in the data. Without it, you can't be sure if a shift in a score—like a student's reading level or a patient's cognitive function—is real progress or just random noise. This is particularly important for any kind of cognitive assessment, where decisions about care and educational support hang on the accuracy of the data.

Actionable Insight: A reliable test provides a stable baseline. It allows you to track progress, evaluate interventions, and make informed decisions with confidence, knowing your measurement tool is dependable.

This stability is a non-negotiable benchmark for any new assessment tool. Take the CA Method (CAM), a behavioural assessment tool. A pilot study found its test-retest reliability coefficient was above the 0.7 threshold, which meets the established rules for what's considered acceptable reliability. This tells us the method is stable enough for repeated use.

Ultimately, getting a solid handle on test-retest reliability is the first step toward choosing—and creating—assessments that deliver meaningful and dependable results, every single time.

How to Calculate Test Retest Reliability

Figuring out test-retest reliability might sound intimidating, but it’s really a straightforward process. Think of it as a quality check for your assessment tool.

You simply administer a test, wait a bit, give the same test again, and then compare the two sets of scores to see how well they match up. This gives you a single number—the reliability coefficient—that tells you just how consistent your tool is.

The whole thing boils down to a few key steps. First, you need to give your assessment to a good sample of participants. Then, you decide on the right amount of time to wait before the retest—it’s a balancing act. You need enough time to pass so they don't just remember their answers, but not so much time that the skill you're measuring has actually changed. Finally, you crunch the numbers to get your score.

Choosing Your Statistical Tool

When it’s time to run the numbers, two main statistical methods usually come into play: Pearson's correlation coefficient (r) and the Intraclass Correlation Coefficient (ICC). They both measure consistency, but they’re cut out for slightly different jobs.

Pearson's Correlation (r): This is the more basic of the two. It looks at the strength and direction of the relationship between the two sets of scores. It's perfect for a quick check to see if people who scored high the first time also tended to score high the second time.

Intraclass Correlation Coefficient (ICC): The ICC is a bit more sophisticated and often the go-to choice for researchers. It doesn't just look at the relationship; it also checks for systematic differences. For example, did everyone score five points higher on the retest? The ICC catches that, which makes it incredibly valuable in clinical settings where you need to know the scores are in absolute agreement.

No matter which tool you use, you’re aiming for a coefficient that’s as close to 1.0 as possible. A high score means the results from the first and second tests were nearly identical, which is exactly what you want to see for a reliable assessment.

A Practical Example Step by Step

Let’s walk through a real-world scenario. Imagine you’ve built a new digital tool to measure working memory, much like one you'd find in a cognitive assessment online. You need to be sure it's reliable before you roll it out.

Administer the First Test: You bring in a group of 50 adults on a Monday morning and have them complete your working memory test. You meticulously record every score.

Wait for the Right Interval: You decide that two weeks is the sweet spot. It’s long enough that they probably won't recall their specific answers (which would skew the results), but it’s short enough that their genuine working memory skills haven’t had time to change.

Administer the Retest: Exactly two weeks later, you have the same 50 adults take the exact same test, under the exact same conditions.

Calculate the Coefficient: Now you have two scores for every single participant. You plug this data into your statistical software and calculate the ICC. The software spits out a number: 0.88.

Actionable Insight: An ICC of 0.88 is fantastic—that falls into the 'good' to 'excellent' range. This single number provides powerful proof that your new working memory tool gives consistent, repeatable results. It's a trustworthy measure for tracking cognitive function over time.

Interpreting Your Reliability Scores

So, you've run the numbers and now you have a reliability coefficient, a figure usually sitting somewhere between 0.0 and 1.0. What does that actually tell you? Think of it less as an abstract statistic and more as a direct report card on your assessment's consistency.

A score is never just a score. It’s a measure of trust. The closer that number inches toward 1.0, the more you can depend on the tool. Conversely, a score hovering near 0.0 suggests the results are shaky, likely swayed by random chance and error. Getting a feel for this spectrum is crucial for making smart choices about the assessments you use.

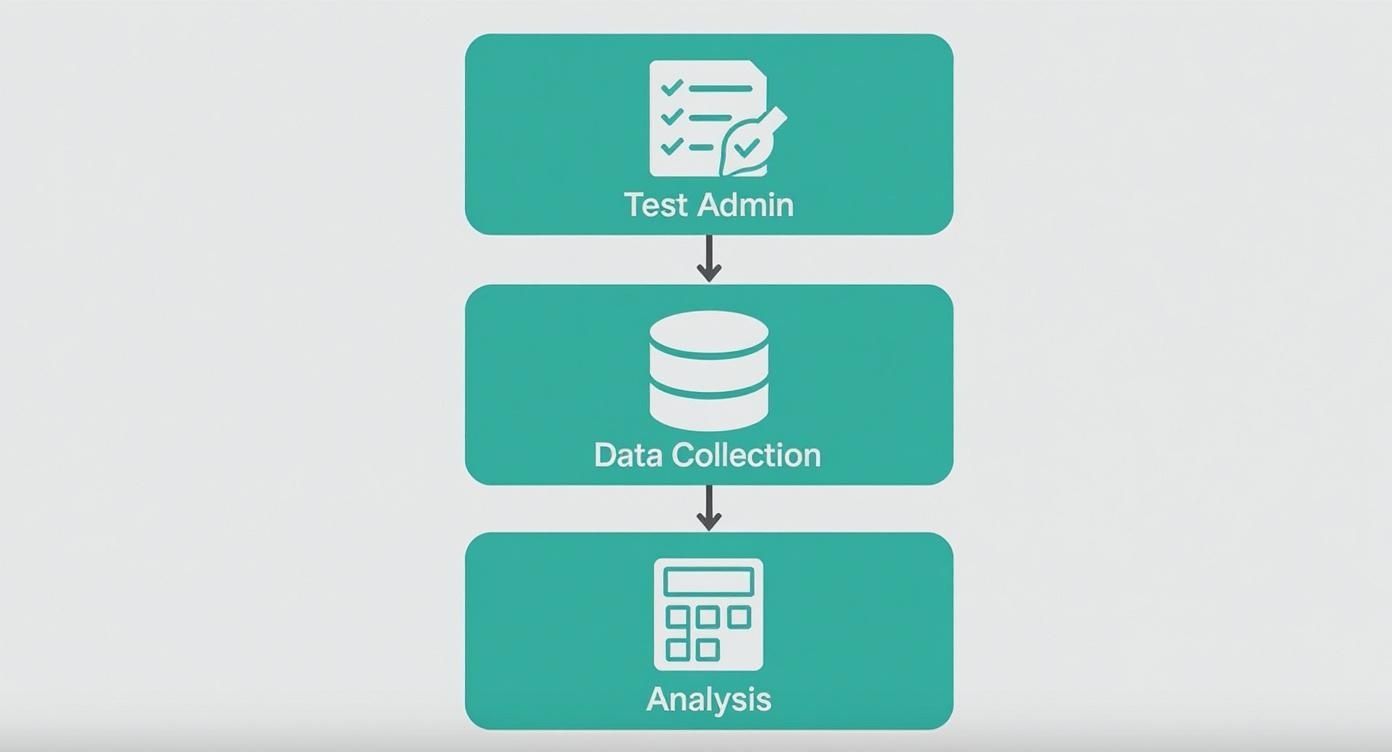

This infographic neatly lays out the journey from the initial test to the final reliability score.

As you can see, every step is built on the one before it, all to ensure that final number is a true reflection of the test's stability over time.

Benchmarks for Reliability

While there isn't one single "perfect" score, well-established guidelines give us a common language to talk about the quality of test retest reliability. These benchmarks help us classify the strength of our assessments.

Excellent Reliability: A coefficient above 0.90 is the gold standard. You'll want this level of precision for high-stakes decisions, like making a clinical diagnosis or deciding if someone qualifies for a specialized program.

Good Reliability: A score between 0.80 and 0.89 points to a strong, dependable test. Many of the most trusted psychological and educational assessments land in this range.

Acceptable Reliability: A coefficient from 0.70 to 0.79 is often good enough for many research projects or low-stakes assessments. It shows a decent level of consistency.

Poor Reliability: Any score dipping below 0.70 should be a major red flag. This tells you that a large chunk of a person's score is just random noise, not a true measure of their ability. The results are simply not trustworthy.

To give you a quick reference, here’s a breakdown of how to interpret these scores in the real world.

Interpreting Test Retest Reliability Coefficients

Reliability Coefficient (ICC or r) | Interpretation | Example Use Case |

|---|---|---|

Above 0.90 | Excellent. Results are highly consistent and stable. | High-stakes clinical diagnostic tools (e.g., assessing cognitive impairment). |

0.80 – 0.89 | Good. Strong reliability suitable for most research and evaluation. | Standardized academic achievement tests or well-validated personality inventories. |

0.70 – 0.79 | Acceptable. Moderate reliability, adequate for group-level analysis or low-stakes screening. | A new questionnaire measuring employee satisfaction in a large company. |

Below 0.70 | Poor. Results are too influenced by error to be considered reliable. | An informal, quickly-made quiz for a team-building exercise. Not for decisions. |

Ultimately, these numbers give us the confidence to stand behind our assessment tools.

Practical Example: Context is everything. A test with a reliability of 0.75 might be perfectly fine for an informal survey about team morale, but it would be completely unacceptable for a high-stakes clinical instrument like the Montreal Cognitive Assessment.

The level of precision needed in clinical settings is incredibly high. You can learn more by reviewing our detailed Montreal Cognitive Assessment instructions.

Making Informed Decisions with Your Score

Your reliability coefficient isn't just a number to jot down in a report—it's your guide for action. It tells you if an assessment is truly up to the task you've set for it.

For instance, a researcher developing a new questionnaire to track daily mood swings might be perfectly happy with a coefficient of 0.82. But a neuropsychologist using a test to monitor a patient's cognitive recovery after a serious brain injury? They would demand a score of 0.95 or higher.

The seriousness of the consequences dictates the level of test retest reliability you need. By interpreting your score within its specific context, you make sure you’re always using the right tool for the job.

Key Factors That Influence Reliability

A test's consistency isn't set in stone. In fact, several key factors can either bolster or undermine its test retest reliability. If you're designing or interpreting an assessment, you need to understand these variables, as they can introduce "noise" that hides the real results. Ignoring them is like trying to measure a plant's height while the ground is constantly shifting beneath it.

One of the biggest factors is the time interval between the first test and the retest. It’s a bit of a tightrope walk.

If the gap is too short, people might just remember their previous answers, which will artificially pump up the reliability score. This is called the memory effect. But if the interval is too long, the actual trait or skill you're measuring might have genuinely changed. A student’s knowledge can improve with studying, or a patient's symptoms might shift with treatment. The sweet spot is finding a gap long enough for memory to fade but short enough that the trait itself is still stable.

Practice Effects and Trait Stability

Another major player is the practice effect. Sometimes, people simply get better on the second go because they’re more familiar with the test format or the types of questions. It’s not that their skill has improved; they’ve just learned how to take the test better. This is a common hurdle, especially when assessing cognitive functions.

Practical Example: The very nature of what you are measuring plays a massive role. A test measuring a stable trait like general intelligence should show high reliability over years. In contrast, a questionnaire assessing a fleeting emotional state, like mood, would naturally have lower test-retest reliability over just a few hours.

The stability of the trait itself is crucial. Think about it: would you expect the same consistency from measuring someone’s height versus their hunger level? Height is a stable characteristic that won't change in a week, giving you high reliability. Hunger, on the other hand, is a temporary state that changes constantly, making it a poor candidate for long-term test retest reliability.

Real World Influences on Reliability

Finally, even subtle changes in the testing environment can throw off the scores. Little things like different instructions from administrators, varying noise levels, or even the time of day can create inconsistencies.

Let's look at a real-world example. The California Verbal Learning Test (CVLT) is a common tool for assessing memory. A 2017 study found its long-term reliability over one year was moderate, with scores ranging from .50 to .72. This variation was influenced by things like practice effects and the participants' own backgrounds. For instance, people with higher education levels showed less improvement on the retest, which skewed the overall reliability calculation. This just goes to show how critical it is to consider these outside variables when you interpret test results. You can discover more about these CVLT findings and their implications.

For assessments that depend on self-reporting, like a concussion symptom questionnaire, keeping these factors in check is absolutely essential for tracking recovery accurately. By carefully managing the time between tests, accounting for practice effects, and standardizing the environment, you can minimize error and get results you can truly trust.

How to Improve Your Assessment Reliability

Boosting the consistency of your assessments isn't about a massive overhaul. It's about taking a series of deliberate, practical steps to cut down on ambiguity and environmental noise.

When you focus on standardizing the experience, you can have much more confidence in the results. You'll know the scores reflect a person's true ability, not just some quirk in the procedure. This is how you strengthen the very foundation of your test retest reliability.

The ultimate goal is simple: create an identical experience for every single person, every single time. Even tiny inconsistencies can pile up, introducing errors that chip away at the dependability of your measurements.

Key Strategies for Better Reliability

Putting a few core principles into practice can make a world of difference. It starts with refining the assessment itself and then standardizing how it's delivered.

Write Clear, Unambiguous Questions: Vague language is the enemy of reliability. Think about a question like, "How active are you?" That's wide open to interpretation. A much better version would be, "How many days per week do you engage in at least 30 minutes of moderate physical activity?" Specificity closes the door on guesswork.

Standardize Instructions and Scoring: Every participant needs to get the exact same instructions, delivered in the same way. The same goes for your scoring criteria—they have to be objective and crystal clear. If an administrator is scoring a task, they need rigorous training to apply the rules consistently and keep their own judgment out of it.

Control the Testing Environment: Do everything you can to minimize external distractions. A quiet, well-lit room without interruptions helps ensure that performance isn't being dragged down by outside factors. This is especially vital for assessments that require high levels of focus; you can find more tips in our guide on how to improve focus and concentration.

Actionable Insight: By meticulously controlling these variables—from the phrasing of a question to the background noise in a room—you systematically root out sources of error. This disciplined approach is what turns a good assessment into a truly reliable one.

These steps give you a clear path toward building assessment tools that are more robust and trustworthy. If you'd like more expert guidance on creating and implementing reliable cognitive assessments, our team is here to help. Contact us for a consultation, or subscribe to our newsletter for the latest insights delivered to your inbox.

Answering Your Questions

Even after wrapping your head around test retest reliability, a few practical questions almost always pop up. Let's tackle some of the most common ones to clear up any lingering confusion.

What's the Ideal Time Between Tests?

Honestly, the perfect interval depends entirely on what you’re trying to measure. There's no one-size-fits-all answer here.

Practical Example: For a deeply ingrained trait like IQ, you could wait a year or even longer without skewing the results. But for something that shifts more easily, like a person's mood or anxiety levels, you'd want to retest within a few days. Otherwise, you're not measuring consistency—you're just tracking genuine life changes.

Are Reliability and Validity the Same Thing?

Not at all, and it's a critical difference to understand. Imagine you have a kitchen scale you use for baking.

Practical Example: A scale that consistently tells you a one-kilogram bag of flour weighs exactly 1.2 kilograms is reliable—it gives you the same incorrect answer every single time. But it is absolutely not valid, because it isn't accurate.

Reliability is all about consistency. Validity is about accuracy. A test must be reliable for it to have any chance of being valid, but just because a test is reliable doesn't automatically make it valid.

What Are Other Types of Reliability?

While test retest reliability zeroes in on consistency over time, other forms measure different kinds of consistency that are just as important:

Inter-Rater Reliability: This ensures that different people administering or scoring the same test come to similar conclusions. It removes subjective bias.

Internal Consistency: This checks that different items on the same test, which are all supposed to be measuring the same thing, actually produce similar results.

Getting familiar with these different angles gives you a much fuller picture of an assessment's overall quality and trustworthiness.

At Orange Neurosciences, we build our cognitive assessments on a foundation of the highest standards for both reliability and validity. Our goal is to give you data you can truly trust. Discover our evidence-based platform and see for yourself how precise measurement can lead to better outcomes.

Orange Neurosciences' Cognitive Skills Assessments (CSA) are intended as an aid for assessing the cognitive well-being of an individual. In a clinical setting, the CSA results (when interpreted by a qualified healthcare provider) may be used as an aid in determining whether further cognitive evaluation is needed. Orange Neurosciences' brain training programs are designed to promote and encourage overall cognitive health. Orange Neurosciences does not offer any medical diagnosis or treatment of any medical disease or condition. Orange Neurosciences products may also be used for research purposes for any range of cognition-related assessments. If used for research purposes, all use of the product must comply with the appropriate human subjects' procedures as they exist within the researcher's institution and will be the researcher's responsibility. All such human subject protections shall be under the provisions of all applicable sections of the Code of Federal Regulations.

© 2025 by Orange Neurosciences Corporation